What's

Three Benefits of Explainable Artificial Intelligence

Explainable Artificial Intelligence: From Black Boxes to Transparent Models

Artificial intelligence will increasingly interfere in our lives. However, many people do not trust artificial intelligence and are reluctant to leave even trivial decisions to it. One of the reasons is that we often cannot explain the decisions of artificial intelligence because complex models behave like black boxes.

One way to increase trust in artificial intelligence is that the people will be able to understand how AI makes its decisions and why it acts a certain way. At present, AI is still a big mystery to the general public, but better explainability and transparency of models could significantly increase the acceptance of artificial intelligence.

In the second part of this series of articles on the topic of explainable and transparent artificial intelligence (XAI), you will learn:

- why it is important and beneficial to know how the model made its decisions,

- why explanations of artificial intelligence must be tailored to the person to whom they are addressed.

The ability to understand how models made their decisions has several benefits:

1. Increasing the trustworthiness and acceptance of artificial intelligence in society

Artificial intelligence as such and the predictions it provides will naturally be more accepted in society if, in addition to the prediction itself, it will be able to provide how it came to the prediction. Let’s have an example – a bank does not approve a loan to a young person based on an AI recommendation. It would be appropriate to know the reason behind such recommendation, which probably influenced the subsequent decision of the bank employee. Was it because the client has a low income and therefore he or she has to find a better paid job or reduce their loan demands? Or was it because the person was employed for a short time and if they waited a few more months, the loan would be approved?

This example proves that the decisions of artificial intelligence can have a significant impact on human life. This only emphasizes the importance of interpretability and explainability of artificial intelligence.

2. Discovering new knowledge in areas where we already have a lot of data (e.g. physics, chemistry)

If we can learn why AI has made predictions the way it did in these areas, we can potentially discover relationships we have not seen before. While a person usually teaches AI to make good predictions, it is possible that AI achieves comparable or even better results than a human. Then it is appropriate to ask – did artificial intelligence come to knowledge that we as humans have missed? It is a kind of reverse engineering, where we (as people) try to understand how a system that works well actually works.

You may be familiar with the example of the AlphaGo1 algorithm. The algorithm came up with its own, formerly unknown strategies. Using these strategies, it was the first AI to defeat the human champions in the game Go. What if we could do something like that in medicine? What if an algorithm discovered connections in the data between diseases and areas or processes in the human body that we couldn’t see until now? Explainability methods could be the way to discover them.

3. Overlap with ethics and detection of biases, prejudices or backdoors encoded in artificial intelligence models

The most widely used models of artificial intelligence currently use examples provided by humans in the learning process. For example, if we want to create a model that will simulate a discussion on Twitter, the most natural way to achieve this is to “show” the model a large number of real Twitter conversations in the learning process.

However, as a well-known experiment carried out by Microsoft2 showed, the model not only learnt how to use the English language and formulate meaningful sentences from real tweets, but it also adopted something extra – our biases and prejudices. Chatbot Tay became a racist.

Explainability methods can be an important tool in early detection of hidden biases encoded in the model. If we know what the prediction of the model is based on and what knowledge it has learnt from the training data provided by humans, we have a better chance to detect similar defects.

So far, we have talked about biases and prejudices that have unintentionally been transferred to the model. However, the current topic is the fight against intentional insertion of the so-called backdoor to the artificial intelligence model, which allows the attacker to influence the behavior of the model. For example, modern vehicles use artificial intelligence to recognize traffic signs. Especially in the case of autonomous vehicles, this is a critical functionality – whether the STOP sign is correctly recognized can mean the difference between safe driving and a car accident.

Imagine that a backdoor is encoded in a model that recognizes traffic signs. The backdoor just needs to change a small detail on the STOP traffic sign. For example, add a green marker to its upper right corner. The model would suddenly start to confuse such road signs with the “Main Road” sign. Detection of a backdoor is not easy at all, because the model will behave pathologically only under very specific conditions. Explainability and interpretability have the potential to help detect such targeted attacks. For example, if we discover that the model is too focused on the upper right corner of the image (by using some of the explainability methods),, we can make the model more robust to such vulnerability.

The way the same piece of information is presented has a dramatic impact on its understanding

When it comes to explainable artificial intelligence, it is important to think about who the explanation is addressed to. The degree to which a model and its predictions are understandable to humans always depends on the audience. It is different to explain artificial intelligence to scientists and to end users (e.g. people who use a smart home assistant). Therefore, the explanation and interpretation should also take the audience into account and they should be literally tailored to them.

This is one of the reasons why there is no universal explainability method that can be applied in every context.

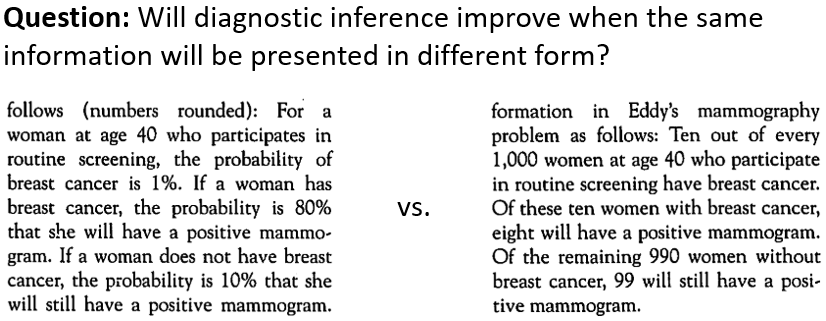

An example of how different forms of providing the same information can affect their understanding by the audience is also provided by a study by Hoffrage a Gigerenzer3. The aim was to find out whether the conclusions reached by doctors would be different if we presented them the same information in different forms. According to the doctors, will the probability that a patient has a disease change if we present patients’ data in different ways?

2. In the right column, the information is presented as natural frequencies.

Question: Will diagnostic inference improve when the same information will be presented in different forms?

Method 1, probabilistic representation: “For a woman at age 40 who participates in routine screening, the probability of breast cancer is 1%. If a woman has breast cancer, there is an 80% probability that she will have a positive mammogram. If a woman does not have breast cancer, the probability that she will still have a positive mammogram is 10%. Imagine a woman from this age group with a positive mammogram. What is the probability that she actually has breast cancer?”

Method 2, natural frequencies: “Ten out of every 1,000 women at age 40 who participate in routine screening have breast cancer. Of these ten women with breast cancer, eight will have a positive mammogram. Of the remaining 990 women without breast cancer, 99 will still have a positive mammogram. Imagine a group of 40-year-old women with positive mammograms. How many of them actually have breast cancer?”

Compared to probabilistic representation, when the information was provided as natural frequencies, the accuracy with which doctors answered the question (how many women actually have breast cancer) in the model example increased from 10% to 46%. Taking this fact into consideration, we can conclude that it is much easier for a person to work with specific numbers than with probability. Interestingly, according to the authors of the study, most of the medical literature from that period used the method of probabilistic representation for explanations.

Conclusion of the Second Part

In the second part of the series, we talked about three important benefits of explainable artificial intelligence:

- Artificial intelligence and its use will be more accepted in society if, in addition to understanding the prediction of artificial intelligence itself, we can explain why the prediction was as it was.

- By knowing exactly what the decisions of artificial intelligence are based on, we can discover relationships and patterns we have not seen before. Thus, it may happen that with the help of AI we will discover new knowledge in areas where we have a large amount of data.

- Useful knowledge is not the only thing artificial intelligence has adopted from us – unfortunately, it has also adopted our biases and prejudices. Explainable methods can help us to discover hidden biases encoded in artificial intelligence models and combat them effectively.

We also explained why it is extremely important to adequately set up how the prediction of an AI model and its explanation will be presented to humans. The way information is presented has a huge impact on its correct understanding. Therefore, when creating AI models and explainability methods, we must think about who the explanation is intended for and whether the chosen way of presentation is understandable to the audience.

If you want to learn more about the topic of explainable artificial intelligence, do not miss the other parts of the series. In the third part we will deal with transparent models and the so-called black box models. In addition, we will have a look at the methods for interpreting artificial intelligence models and explaining their predictions.

The PricewaterhouseCoopers Endowment Fund at the Pontis Foundation supported this project.

Resources:

[1] AlphaGo Zero: Starting from scratch, web: https://deepmind.com/blog/article/alphago-zero-starting-scratch

[2] Chatbot Tay. Web: https://spectrum.ieee.org/in-2016-microsofts-racist-chatbot-revealed-the-dangers-of-online-conversation

[3] Hoffrage U, Gigerenzer G. Using natural frequencies to improve diagnostic inferences. Acad Med. 1998 May;73(5):538-40. doi: 10.1097/00001888-199805000-00024. PMID: 9609869.