
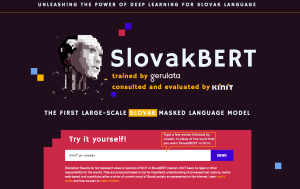

Introducing the first public large neural Slovak language model – SlovakBERT

KInIT and Gerulata Technologies introduce SlovakBERT, a new language model for Slovak, which will help improve the automatic processing of texts written in Slovak.

Neural language models have recently become the state-of-the-art technology for natural language processing (NLP). They have allowed researchers to improve results on many NLP tasks, and they also serve as a technological foundation for applications such as Google Search or Google Translate, which are used by billions of people every day. Such models were initially created mainly for English and subsequently for other widely used languages, such as Chinese or French. Models for smaller languages, such as Czech and Polish, appeared later. Multilingual models are now available as well.

Today we present the first such modern language model for Slovak built on the so-called transformer architecture: SlovakBERT¹. The model was trained by our partner, Gerulata Technologies, and our NLP team provided consultation and evaluated it scientifically. SlovakBERT learned Slovak from about 20 GB of Slovak text collected from the Web. This data is the model's snapshot of what the Slovak language looks like.
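SlovakBERT is a masked language model: during training, some words in a sentence are hidden and the model learns to predict them from the surrounding context. The sketch below only illustrates how one such training example could be built. It assumes whitespace-separated words and the 15% masking rate used by the original BERT; SlovakBERT's real tokenizer splits text into subword units, and its exact training settings are described in the article, not here. The names `mask_tokens` and `MASK` are hypothetical.

```python
import random
from typing import List, Optional, Tuple

MASK = "<mask>"  # placeholder symbol for a hidden word (assumed)


def mask_tokens(
    tokens: List[str], rate: float = 0.15, seed: int = 0
) -> Tuple[List[str], List[Optional[str]]]:
    """Hide a fraction of tokens; return the masked input and the prediction targets.

    Simplified sketch: every selected token is replaced by MASK. The original
    BERT recipe sometimes keeps the token or swaps in a random one instead.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    n = max(1, round(len(tokens) * rate))  # mask at least one token
    positions = set(rng.sample(range(len(tokens)), n))
    # Input the model sees: selected positions replaced by MASK.
    masked = [MASK if i in positions else t for i, t in enumerate(tokens)]
    # What the model must predict: the original word at masked positions, else None.
    targets = [t if i in positions else None for i, t in enumerate(tokens)]
    return masked, targets
```

For example, `mask_tokens("Deti sa hrajú na ihrisku".split(), rate=0.4)` hides two of the five words, and the model is trained to recover them. Repeating this over roughly 20 GB of text is what makes training so computationally expensive.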

Try it out!

You can learn more about SlovakBERT and experiment with how it works. Just visit the SlovakBERT website and try it out for yourself.
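If you prefer to try the model from code, a minimal sketch using the Hugging Face `transformers` fill-mask pipeline might look like the following. It assumes the model is published on the Hugging Face Hub as `gerulata/slovakbert` and uses the RoBERTa-style `<mask>` token; check the SlovakBERT GitHub page for the exact model ID. The helper names `fill_mask` and `format_predictions` are hypothetical.

```python
from functools import lru_cache
from typing import Any, Dict, List

# Assumption: the Hugging Face Hub ID under which SlovakBERT is published.
MODEL_ID = "gerulata/slovakbert"


@lru_cache(maxsize=1)
def _unmasker():
    # Imported lazily so format_predictions() works without transformers installed.
    from transformers import pipeline
    # Downloads the model on first use, then reuses the same pipeline object.
    return pipeline("fill-mask", model=MODEL_ID)


def fill_mask(sentence: str, top_k: int = 5) -> List[Dict[str, Any]]:
    """Return the model's top_k guesses for the <mask> token in `sentence`."""
    return _unmasker()(sentence, top_k=top_k)


def format_predictions(predictions: List[Dict[str, Any]]) -> List[str]:
    """Turn fill-mask pipeline output into readable 'word (score)' strings."""
    # Subword tokens may be decoded with a leading space, so strip it.
    return [f"{p['token_str'].strip()} ({p['score']:.2f})" for p in predictions]
```

Usage: `print(format_predictions(fill_mask("Deti sa <mask> na ihrisku.")))` lists the words the model considers most likely in place of `<mask>`, each with its probability.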

Training SlovakBERT was not an easy task: it required almost two weeks of computation on a powerful computational server. By comparison, a computer with a mid-range graphics card might need years to finish the same computations, and a regular work laptop perhaps decades. SlovakBERT is now open to the world and accessible² to the NLP community. We believe that this step will raise the level of automated Slovak language processing for researchers and companies, but also for the general public.

As researchers, we verified the potential of SlovakBERT and tested how well it works on various tasks. We found that it achieves excellent results in grammatical analysis, semantic analysis, sentiment analysis and document classification. We describe the results of these experiments in the publicly available article SlovakBERT: Slovak Masked Language Model³. The model proved to be so good that we are already using it in projects with our industry partners, and it might soon appear in the first deployed applications, for example in an upcoming system for analysing the sentiment of customer communication on public social network profiles.

We are also aware of the possible pitfalls of such a model. Since it is trained on text available on the Web, there is no filtering mechanism by which we can verify the suitability of this text. SlovakBERT therefore also learned from texts containing vulgarisms, conspiracy theories, prejudices, stereotypes and many other negative phenomena that Slovak language users produced on the Web. To a certain extent, it is therefore a mirror of everything that happens in society. In the near future, we plan to research this issue: how to identify various prejudices in language models and, if necessary, suppress them.

1 The name follows the original BERT model from Google, which was trained for English. BERT is an abbreviation for “Bidirectional Encoder Representations from Transformers”, i.e., it names the deep learning technology behind the neural network.

2 SlovakBERT on GitHub

3 Matúš Pikuliak, Štefan Grivalský, Martin Konôpka, Miroslav Blšták, Martin Tamajka, Viktor Bachratý, Marián Šimko, Pavol Balážik, Michal Trnka, Filip Uhlárik. 2021. SlovakBERT: Slovak Masked Language Model