
How do professionals and high school students judge the veracity of articles on Facebook? Take a look at the results of our eye-tracking study

There is so much misinformation on the web today that evaluating the veracity of news can sometimes resemble judging the stories in the “Beyond Belief” series. Is it possible to make this process more efficient and to automate it in some way? How can monitoring the behavior of laypeople and professionals help us evaluate the veracity of sources? Our researchers Jakub Šimko, Matúš Tomlein, Róbert Móro and Mária Bieliková asked these questions in their latest study, “A study of fake news reading and annotating in social media context”, published in the journal New Review of Hypermedia and Multimedia.

How did our eye-tracking study go?

The researchers did not make things easy for the participants. A total of 44 laypeople (high school students) and 7 professionals working in journalism or fact-checking were given 50 articles (mostly taken from existing media) in an application imitating the real Facebook. However, participants could assess veracity only on the basis of the articles' content: there were no authors, sources, visual cues, or counts of likes and comments on posts. How participants responded to this challenge was monitored using eye tracking.

With the lay participants, the researchers were particularly interested in how they tend to behave when “consuming” fake news on social media and how their interests and opinions affect this behavior. They also observed how the participants who evaluated articles more successfully behaved and what they noticed in the content. If any patterns of behavior are found, this knowledge may be useful for the automatic detection of misinformation.

The imitation “Facebook” contained articles on 11 somewhat controversial topics: food quality, weight loss, Muslims in the EU, the pharmaceutical industry, LGBT rights, right-wing extremism, vaccinations, UFOs, global warming, deforestation and migrants. Each topic included both true and false articles, as well as articles with opposing views on the issue.

What did the high school students' gaze show us?

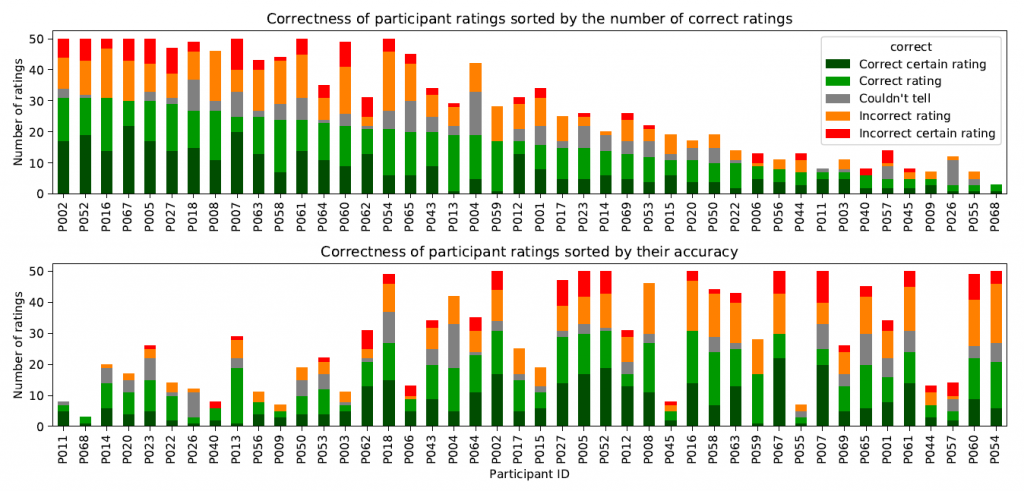

So what distinguished the successful students from those who did poorly in the first part of the experiment? For example, the successful ones took more time to evaluate the content (they assessed fewer articles, but did better). By contrast, lay participants who spent more time reading headlines and information only within “Facebook” itself, relying mainly on this source of information, had worse results. It was also interesting that although the strength of interest corresponded to the strength of the participants' opinion on most topics, this was not the case for some topics (such as same-sex marriage). We cannot interpret this result with certainty, but it could point to external factors influencing the students' opinions.

How successful were the professionals in the experiment?

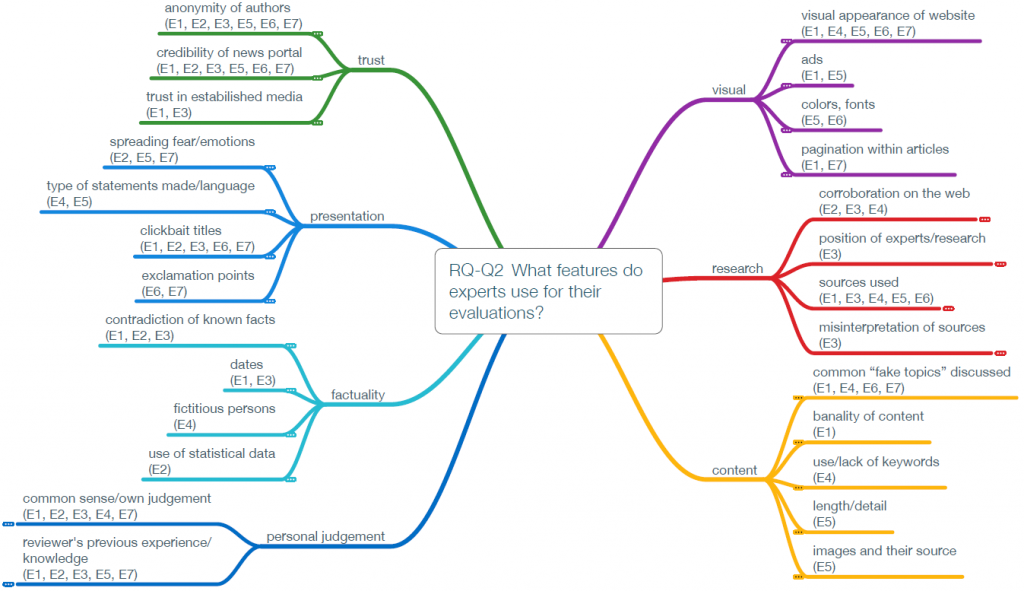

The second part of the experiment looked at how experts would fare in evaluating the veracity of articles purely on the basis of content. Although they did better than the high school students, even the professionals could not correctly evaluate up to 30% of the articles in this way. A more significant improvement could be observed only with true articles (a 79% success rate vs. 58% for the students). For fake articles, what the experts missed most were the metadata and other information they normally use in their work to validate an article. This mainly means the ability to verify the reputation of the author or publisher; for them, anonymity is a strong sign of untrustworthy content. They would also need to verify factual data on the web: they usually check dates and statistics, but also pictures.

In the experiment, participants could rely only on specific features of the content's language, such as labels, certain keywords, the author's judgements and opinions, a lack of factual information, or fear-mongering (or on their own judgment). When evaluating fake news, they said they missed visual characteristics such as the number of ads, bright colors, large and bold fonts, or the length and pagination of the content.

Experts considered the following kinds of articles the most difficult to recognize:

- Articles that combine different sources and then misinterpret them

- Articles that mix true content with a small amount of fabrication

- Articles without a clear opinion, which leave it to readers to form their own

- Media whose publisher or portal owner cannot be verified

What can the results of the research teach us?

Although the veracity of some articles can be judged on the basis of content characteristics, even experts cannot do so with certainty. Artificial intelligence can assist them, for example, by analyzing sentiment or searching for keywords. However, article sources and metadata, such as authors or publishers, are very important for expert news evaluation. Visual features are also important: as other credibility research has shown, site design is precisely the characteristic that both professionals and laypeople notice when assessing credibility.

The research into how laypeople evaluate news confirmed the importance of a deeper analysis of content (the more successful participants were those who spent more time evaluating it). However, this does not apply to the analysis of article headlines within the Facebook application itself: students who relied only on those judged veracity less successfully.