AI-Auditology: Social Media AI Algorithms Auditing

The goal of the AI-Auditology project is to fundamentally change the oversight of social media AI algorithms, such as recommender systems and search engines, and of their tendency to spread and promote harmful content. The main ground-breaking idea of this proposal is a novel paradigm: model-based algorithmic auditing.

The project serves a noble purpose and will have a significant positive societal impact on the online media environment, in Europe at the very least. Currently, media regulators have no real tool to quantitatively investigate whether big tech platforms follow through on their self-regulatory promises or comply with relevant legislation on tackling recommender system biases, mitigating toxic content, or preventing the spread of misinformation. Once the new methods and the supporting software platform are built, the public will gain a powerful watchdog tool, fully in line with European values.

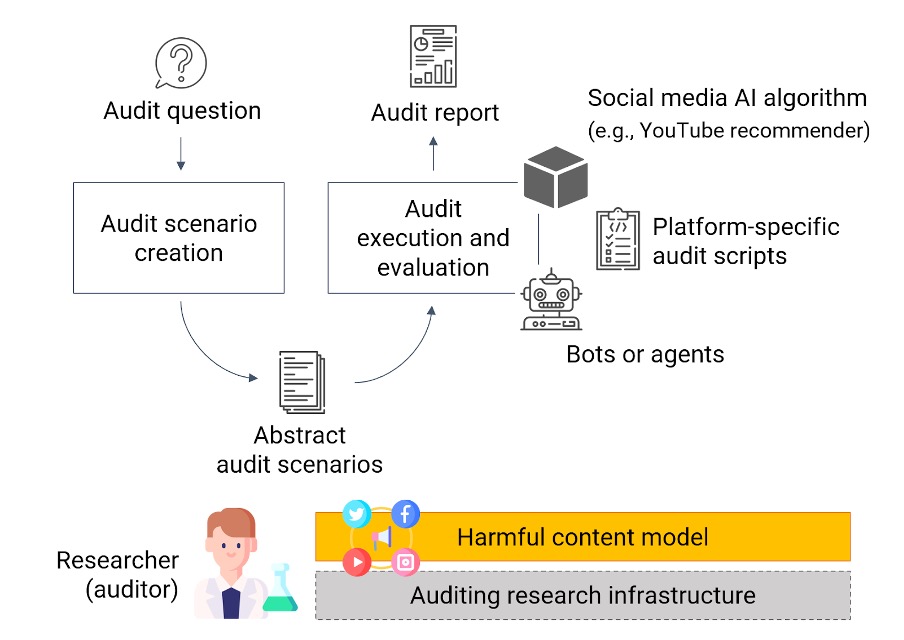

Social media algorithmic auditing is a process in which the audited platform is stimulated with behavior generated by automated agents (posing as platform users) while the platform's responses (e.g., recommendations) are collected and analyzed. Model-based algorithmic auditing refers to an innovative application of model-based design to support, or even automate, individual steps of the auditing process (i.e., creating audit scenarios, executing audits, and evaluating their results).
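The stimulate-collect-analyze loop described above can be sketched in Python against a toy, in-memory platform. This is an illustrative assumption, not the project's implementation: `ToyPlatform`, `run_audit` and `harmful_ratio` are hypothetical names, the recommender logic is invented for the example, and a real audit would drive an actual platform whose algorithm is a black box.

```python
from dataclasses import dataclass, field


@dataclass
class ToyPlatform:
    """Hypothetical stand-in for an audited platform (a real one is a black box)."""
    catalog: dict[str, bool]  # item id -> True if the item is labeled harmful
    history: dict[str, list[str]] = field(default_factory=dict)

    def watch(self, user: str, item: str) -> None:
        self.history.setdefault(user, []).append(item)

    def recommend(self, user: str, k: int = 2) -> list[str]:
        watched = self.history.get(user, [])
        # Toy personalization: the share of harmful items in the user's history
        # determines how many of the k recommendations are harmful.
        share = sum(self.catalog[i] for i in watched) / max(len(watched), 1)
        unseen = [i for i in self.catalog if i not in watched]
        harmful = [i for i in unseen if self.catalog[i]]
        benign = [i for i in unseen if not self.catalog[i]]
        picks = harmful[: round(share * k)]
        return picks + benign[: k - len(picks)]


def run_audit(platform: ToyPlatform, agent: str, actions: list[str], k: int = 2) -> list[list[str]]:
    """Execution step: stimulate the platform with scripted agent behavior and collect responses."""
    log = []
    for item in actions:
        platform.watch(agent, item)               # the agent's action (stimulus)
        log.append(platform.recommend(agent, k))  # the platform's response
    return log


def harmful_ratio(platform: ToyPlatform, recs: list[str]) -> float:
    """Evaluation step: fraction of recommended items labeled as harmful."""
    return sum(platform.catalog[i] for i in recs) / len(recs)


platform = ToyPlatform(catalog={"h1": True, "h2": True, "b1": False, "b2": False, "b3": False})
log = run_audit(platform, "agent-1", ["b1", "h1"])
print([harmful_ratio(platform, recs) for recs in log])  # [0.0, 0.5]
```

In this toy setting, the agent's single interaction with a harmful item is enough to raise the share of harmful recommendations, which is the kind of effect an audit is meant to measure.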

The underlying harmful content model provides a simplified, partial representation of harmful content in a social media environment and of user behavior around such content (e.g., which kinds of users interact with different instances of harmful content, and exactly how they do so). It is built and continuously maintained using the outcomes of research studies and public opinion polls, harmful content mitigation activities (e.g., fact-checking), and real-world social media data. Such a model has not been researched so far; besides revolutionizing algorithmic auditing, it would have a substantial multidisciplinary impact on other research areas as well.

The harmful content model is then used as an integral part of the auditing process, first and foremost to assist human experts by generating abstract audit scenarios, which are translated into platform-specific scripts at execution time. The whole auditing process is supported by an auditing research infrastructure that uses advanced machine learning and natural language processing techniques.
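The split between abstract audit scenarios and platform-specific scripts can be illustrated with a minimal Python sketch. Everything here is a hypothetical assumption for illustration: `AbstractStep`, `CONTENT_MODEL`, `TEMPLATES`, `generate_scenario`, `translate`, the platform names and the command syntax are invented, and the model fragment is a trivial lookup table rather than the harmful content model the project describes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AbstractStep:
    action: str  # platform-independent action, e.g. "search" or "watch"
    topic: str   # harmful content topic taken from the model
    stance: str  # e.g. "promoting" or "debunking"


# Hypothetical fragment of a harmful content model: for each (topic, stance),
# example search queries that users holding that stance might type.
CONTENT_MODEL = {
    ("topic-x", "promoting"): ["query promoting x"],
    ("topic-x", "debunking"): ["query debunking x"],
}

# Hypothetical per-platform templates turning abstract actions into script commands.
TEMPLATES = {
    "platform-a": {"search": "SEARCH {query}", "watch": "OPEN_TOP_RESULT {query}"},
    "platform-b": {"search": "TYPE search_box {query}; PRESS enter", "watch": "CLICK result[0]"},
}


def generate_scenario(topic: str, stance: str, n_watches: int) -> list[AbstractStep]:
    """Abstract scenario: one search followed by n_watches watch steps."""
    return [AbstractStep("search", topic, stance)] + [AbstractStep("watch", topic, stance)] * n_watches


def translate(scenario: list[AbstractStep], platform: str) -> list[str]:
    """Translate an abstract scenario into commands for one platform at execution time."""
    commands = []
    for step in scenario:
        query = CONTENT_MODEL[(step.topic, step.stance)][0]
        commands.append(TEMPLATES[platform][step.action].format(query=query))
    return commands


scenario = generate_scenario("topic-x", "promoting", n_watches=1)
print(translate(scenario, "platform-a"))
# ['SEARCH query promoting x', 'OPEN_TOP_RESULT query promoting x']
```

Keeping scenarios abstract means the same scenario can be reused across platforms, and only the translation templates need to change when a platform's interface does.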

The proposed model-based algorithmic auditing and next-generation audits fall within a so far under-researched area of algorithmic auditing. The project will therefore contribute to a new and impactful research direction, model-based algorithmic auditing, which addresses difficult challenges hampering further progress in auditing social media AI algorithms. It is also in line with ongoing EU legislative initiatives that demand independent oversight of social media, and it affects all involved stakeholders, including the platforms' users.