KInIT at AAAI 2025 Conference

The 39th edition of the annual AAAI Conference on Artificial Intelligence took place in late February in Philadelphia, Pennsylvania. We are proud that our PhD students work on cutting-edge topics that are recognised by the international research community. This year, we presented the paper Cross-Validated Off-Policy Evaluation by Matej Cief, Branislav Kveton (Adobe) and Michal Kompan.

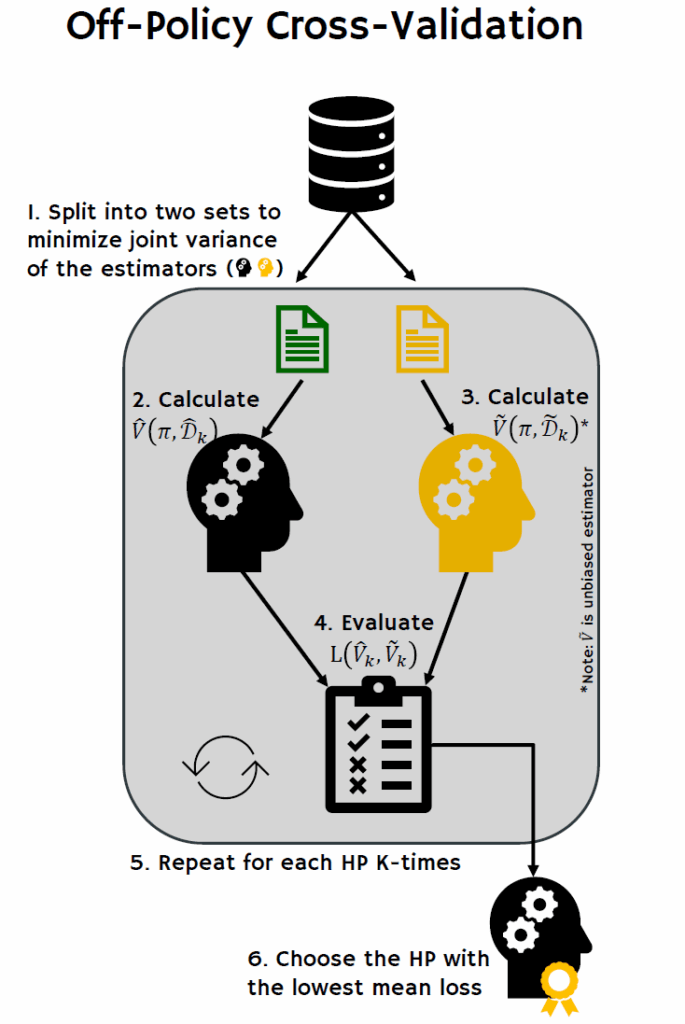

While cross-validation is standard in supervised learning, it has been considered infeasible for off-policy evaluation. Current approaches rely heavily on theoretical guidance, which is limited or non-existent for many methods, so there is no general way to choose between off-policy estimators or to tune their hyperparameters. To address these limitations, we proposed an Off-Policy Cross-Validation method. In supervised learning, we estimate unknown true values from noisy samples; in off-policy evaluation, we similarly estimate a policy’s value from data collected by a different policy. Each logged sample provides an unbiased (though noisy) glimpse of the true value we are seeking. While we cannot directly minimise the error between our estimate and the unknown true value, we can minimise the error between our estimate and an unbiased validator computed on held-out data. Our analysis shows that this is a reliable proxy for selecting the best estimator, giving us a practical way to improve off-policy evaluation (see the paper for details).
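To make the idea more concrete, here is a minimal, simplified sketch in Python. It is not the algorithm from the paper; it only illustrates the general principle described above under our own assumptions. The candidate estimators are clipped inverse propensity scoring (IPS) estimators with different clipping thresholds (a swapped-in example family). The unbiased validator is plain, unclipped IPS computed on the held-out fold. The data come from a hypothetical synthetic five-action bandit, and the function names `clipped_ips` and `cross_validate` are illustrative only. The training and validation folds are independent and the validator is unbiased, so the cross term vanishes and the expected squared gap equals the candidate’s mean squared error plus a constant (the validator’s variance). In this simplified setting, ranking candidates by the gap therefore ranks them by their error in expectation.

```python
import numpy as np


def clipped_ips(actions, rewards, pi_target, pi_logging, clip):
    """Clipped inverse propensity scoring (IPS) estimate of the target policy's value.

    clip=np.inf gives plain IPS, which is unbiased when pi_logging > 0 wherever
    pi_target > 0; a finite clip trades some bias for lower variance.
    """
    weights = np.minimum(pi_target[actions] / pi_logging[actions], clip)
    return float(np.mean(weights * rewards))


def cross_validate(actions, rewards, pi_target, pi_logging, clips,
                   n_splits=20, train_frac=0.5, seed=0):
    """Mean squared gap between each candidate (computed on a training fold)
    and an unbiased plain-IPS validator (computed on the held-out fold)."""
    rng = np.random.default_rng(seed)
    n = len(actions)
    n_train = int(train_frac * n)
    scores = np.zeros(len(clips))
    for _ in range(n_splits):
        perm = rng.permutation(n)  # random train/validation split
        train, test = perm[:n_train], perm[n_train:]
        # Unbiased but noisy "label" for the policy value, from held-out data.
        validator = clipped_ips(actions[test], rewards[test], pi_target, pi_logging, np.inf)
        for i, clip in enumerate(clips):
            estimate = clipped_ips(actions[train], rewards[train], pi_target, pi_logging, clip)
            scores[i] += (estimate - validator) ** 2
    return scores / n_splits


# Hypothetical synthetic bandit: 5 actions, a uniform logging policy,
# a target policy that mostly picks action 4, and Bernoulli rewards.
rng = np.random.default_rng(42)
n_actions, n_samples = 5, 2000
pi_logging = np.full(n_actions, 1.0 / n_actions)
pi_target = np.array([0.02, 0.02, 0.02, 0.04, 0.90])
reward_means = np.array([0.1, 0.2, 0.3, 0.4, 0.5])
actions = rng.choice(n_actions, size=n_samples, p=pi_logging)
rewards = rng.binomial(1, reward_means[actions]).astype(float)

# Select the clipping threshold with the smallest cross-validated gap.
clips = [1.0, 2.0, 4.0, np.inf]
scores = cross_validate(actions, rewards, pi_target, pi_logging, clips)
best_clip = clips[int(np.argmin(scores))]
true_value = float(pi_target @ reward_means)  # known only because the data are synthetic
print("CV scores:", np.round(scores, 5))
print("selected clip:", best_clip,
      "| estimate:", clipped_ips(actions, rewards, pi_target, pi_logging, best_clip),
      "| true value:", true_value)
```

The same loop works for any set of candidate estimators or hyperparameter values; the number of splits and the training fraction used here are arbitrary choices for the sketch.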

The conference attracted 12,957 submissions this year, of which 3,029 papers were accepted (a 23.4% acceptance rate). In addition to the main conference, 49 workshops and two conferences (IAAI and EAAI) were co-located with the event.

Among the keynotes presented at the conference, we would like to highlight the talk by Stuart Russell, entitled “Can AI Benefit Humanity?”. He argued that current LLMs are only one piece of the puzzle in achieving general AI. He also discussed whether we will achieve AGI before 2030. For instance, he pointed out that the amount of money currently spent on AI research is approximately ten times that of the Manhattan Project. On the other hand, there is a risk of an AI “mega-winter” if the AI hype bubble bursts. Regardless of the timeline, the main question remains the same: how do we retain power, forever, over entities more powerful than us? One possible direction is to design AI systems as assistance game solvers that exhibit deference, minimally invasive behaviour and a willingness to be switched off, which is how such systems should respond to their uncertainty about human interests. Last but not least, he stressed the need to develop strategies for the coexistence of humans and AI.

The conference also featured a keynote by the well-known Andrew Ng on “AI, Agents and Applications”, in which he highlighted five important AI trends:

1. Fast prototyping: generative AI makes it possible to build prototypes very efficiently (“move fast and be responsible”).
2. Voice stack: building voice applications is much easier than it was a year ago, although technical hurdles remain.
3. Visual AI: an image-processing revolution is coming and will enable new visual applications, for example in manufacturing.
4. Decreasing data gravity: data tends to attract other data and compute, but in generative AI settings it is feasible to send data far away for processing.
5. Data engineering: its importance is rising, particularly for managing unstructured data (text, images, video and audio).

The work presented at AAAI is the result of our research projects HERMES and DisAI. The HERMES project aims to research and propose state-of-the-art recommender system methods for trustworthy multi-objective and multi-stakeholder (MOS) environments, with an emphasis on operationalising trustworthiness in MOS recommender systems. DisAI focuses on combating disinformation, which we consider one of the most important societal challenges to tackle. At the same time, the project aims to strengthen KInIT’s scientific excellence in selected areas of AI.

You can find more information about the proposed Off-Policy Cross-Validation method in our paper or poster.